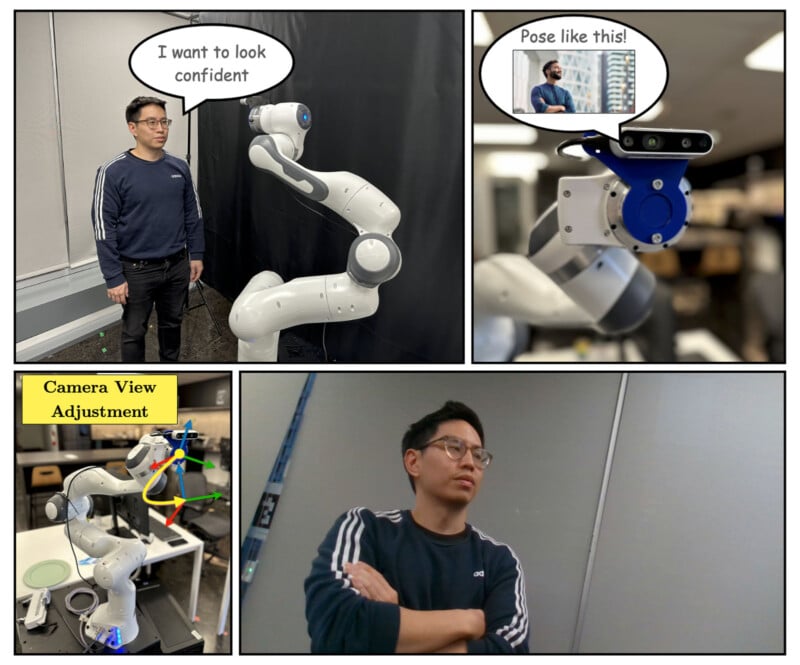

Fig. 1: PhotoBot provides a reference photograph suggestion based on an observation of the scene and a user’s input language query (upper left). The user strikes a pose matching that of the person in the reference photo (upper right) and PhotoBot adjusts its camera accordingly to faithfully capture the layout and composition of the reference image (lower left). The lower-right panel shows an unretouched photograph produced by PhotoBot.

Photographers struggling to find the perfect angle for a group shot have often relied on clumsy tripods and clunky self-timers or, worst of all, stayed out of the frame to take the photo themselves. Enter PhotoBot, a robot photographer that promises to capture a good shot, takes instructions, and uses a reference photo to find the ideal composition.

“We introduce PhotoBot, a framework for fully automated photo acquisition based on an interplay between high-level human language guidance and a robot photographer,” the researchers explain. “We propose to communicate photography suggestions to the user via reference images that are selected from a curated gallery.”

“It was a really fun project,” PhotoBot co-creator and researcher Oliver Limoyo tells IEEE Spectrum. Limoyo worked on the project while at Samsung alongside manager and co-author Jimmy Li.

Say cheese! We introduce PhotoBot, a framework for fully automated photo acquisition based on an interplay between high-level human language guidance and a robot photographer. PhD candidate @OliverLimoyo will present this work at #IROS2024!

Paper: https://t.co/DHGFvfOKJf pic.twitter.com/BPrxDkMxlD

— STARS Laboratory (@utiasSTARS) October 3, 2024

Limoyo and Li were already working on a robot that could take pictures when they saw the Getty Image Challenge during COVID lockdowns. This challenge tasked people with recreating their favorite artworks using only three objects they found around their homes. It was a fun, exciting way to keep people engaged and connected during the early days of the pandemic.

Beyond being a worthwhile diversion, Getty’s competition also inspired Limoyo and Li to have PhotoBot use a reference image to guide the new photos it captures. As IEEE Spectrum explains, they then had to engineer a way for PhotoBot to accurately match a reference photo and adjust its camera accordingly.

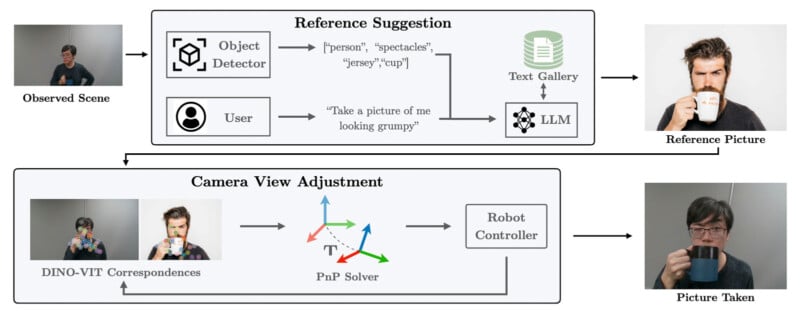

Fig. 2: PhotoBot system diagram. The two main modules are shown: Reference Suggestion and Camera View Adjustment. Given the observed scene and a user query, PhotoBot suggests a reference image to the user and adjusts the camera to take a photo with a similar layout and composition to the reference image.

The process is even more sophisticated in practice than it initially sounds. PhotoBot starts with a written description of the type of photo a person wants. The robot then analyzes its environment, identifying people and objects within its line of sight, and finds photos with corresponding labels in its database. Next, a large language model (LLM) compares the user’s text input with the objects around PhotoBot and the database results to select appropriate reference photographs.

Suppose a person wants a picture of themselves looking happy, and they are surrounded by a few friends, some flowers in a vase, and maybe a pizza. PhotoBot will see all this, label the people and objects, and then find photos within its database that best match the requested photo and include similar components.
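To make the matching step concrete, here is a minimal sketch of ranking gallery photos by how well their labels overlap with what the robot sees. It is an illustration only, not the researchers' method: the `GalleryPhoto` type, the `mood` tag, the Jaccard overlap score, and the sample gallery are all assumptions, and the simple mood filter is a crude stand-in for the LLM comparison described above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GalleryPhoto:
    """Hypothetical gallery entry: a file name, object labels, and a mood tag."""
    name: str
    labels: frozenset
    mood: str


def jaccard(a, b):
    """Overlap between two label sets: |intersection| / |union|."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def suggest_references(scene_labels, mood, gallery, top_k=2):
    """Keep photos tagged with the requested mood, then rank by label overlap."""
    scene = frozenset(scene_labels)
    candidates = [p for p in gallery if p.mood == mood]
    ranked = sorted(candidates, key=lambda p: jaccard(scene, p.labels), reverse=True)
    return ranked[:top_k]


# Made-up gallery for illustration.
GALLERY = [
    GalleryPhoto("picnic.jpg", frozenset({"person", "pizza", "vase"}), "happy"),
    GalleryPhoto("office.jpg", frozenset({"person", "laptop"}), "happy"),
    GalleryPhoto("portrait.jpg", frozenset({"person"}), "confident"),
]

# Labels the robot might detect in the pizza-and-flowers scene above.
picks = suggest_references({"person", "pizza", "vase", "flowers"}, "happy", GALLERY)
for photo in picks:
    print(photo.name)
```

In this toy gallery, the photo that shares the most objects with the scene ranks first, which mirrors the idea of suggesting references that "include similar components."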

Once the user selects the reference shot they like best, PhotoBot will adjust its camera to match the framing and perspective of the reference image. Again, this is a more complex situation than it initially seems, as PhotoBot operates within a three-dimensional space but is trying to match the look of a two-dimensional reference photo.
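The caption of Fig. 5 mentions a PnP (Perspective-n-Point) solution, which is a standard way to recover a camera pose from 3D points and their 2D image locations. The sketch below does not implement a PnP solver; it only shows, under simplifying assumptions, the quantity such a solver drives down: the pixel distance between where 3D keypoints project in the current view and where they appear in the reference photo. The translation-only camera, the focal length, and the keypoint coordinates are all made up for illustration.

```python
import math


def project(point, cam_pos, focal=800.0, center=(320.0, 240.0)):
    """Pinhole projection for a camera at cam_pos looking down +Z (no rotation)."""
    x = point[0] - cam_pos[0]
    y = point[1] - cam_pos[1]
    z = point[2] - cam_pos[2]
    if z <= 0:
        raise ValueError("point is behind the camera")
    return (focal * x / z + center[0], focal * y / z + center[1])


def reprojection_error(points_3d, targets_2d, cam_pos):
    """Mean pixel distance between projected 3D points and reference keypoints."""
    total = 0.0
    for p, t in zip(points_3d, targets_2d):
        u, v = project(p, cam_pos)
        total += math.hypot(u - t[0], v - t[1])
    return total / len(points_3d)


# Hypothetical 3D keypoints on the subject, in metres (e.g. shoulders and head).
subject = [(-0.2, 0.0, 2.0), (0.2, 0.0, 2.0), (0.0, -0.3, 2.0)]

# Pretend the reference photo was taken from this camera position;
# the keypoints seen from there become the 2D targets to match.
reference_pose = (0.1, 0.0, 0.5)
targets = [project(p, reference_pose) for p in subject]

start_pose = (0.0, 0.0, 0.0)
error_before = reprojection_error(subject, targets, start_pose)
error_after = reprojection_error(subject, targets, reference_pose)
print(f"before: {error_before:.1f} px, after: {error_after:.1f} px")
```

Moving the simulated camera to the pose that produced the reference keypoints brings the error to zero. A real system would also have to handle camera rotation, noisy keypoints, and a subject who does not hold perfectly still.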

As for how good PhotoBot is at its job, photographers shouldn’t necessarily panic about the impending reality of a robot photographer. Still, PhotoBot did a good job: respondents preferred its photos over those of eight humans about two-thirds of the time.

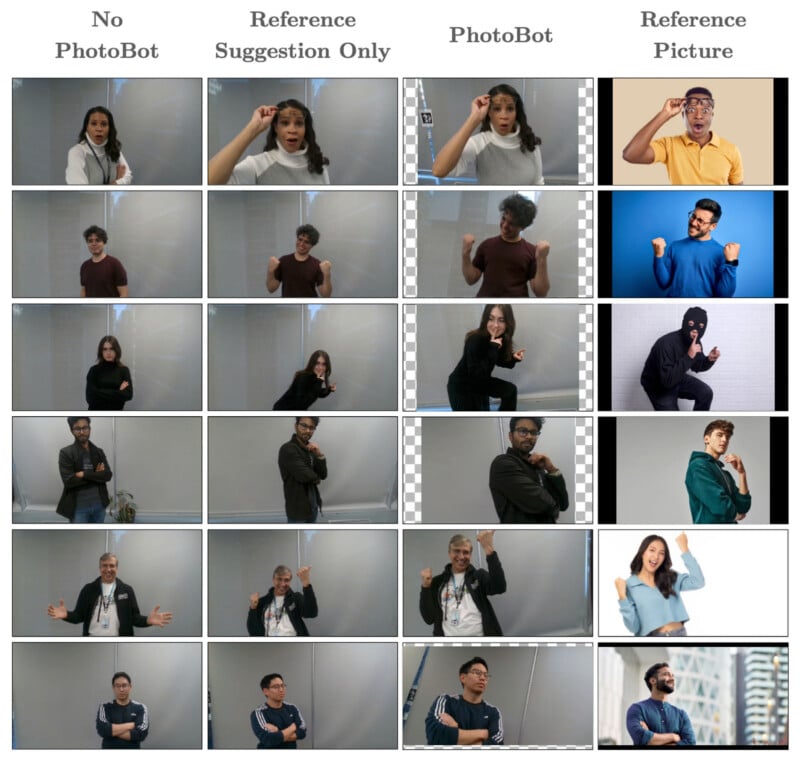

Fig. 5: Sample photos of users evoking various emotions. The user prompts, from top to bottom, are surprised, confident, guilty, confident, happy, and confident. Columns, from left to right, are: user’s own creative posing; user mimicking the suggested reference using a static camera; photo taken by our PhotoBot system; and reference image suggested by PhotoBot. The checkered background indicates cropping. The black background indicates padding of the reference image to facilitate the PnP solution. PhotoBot automatically crops the photos it takes to match the image template.

Li and the rest of the team are no longer working on PhotoBot, but Li thinks their work has possible implications for smartphone photo assistant apps.

“Imagine right on your phone, you see a reference photo. But you also see what the phone is seeing right now, and then that allows you to move around and align,” Li remarks.

Image credits: Photos from the research paper, ‘PhotoBot: Reference-Guided Interactive Photography via Natural Language,’ by Limoyo, Li, Rivkin, Kelly, and Dudek.
